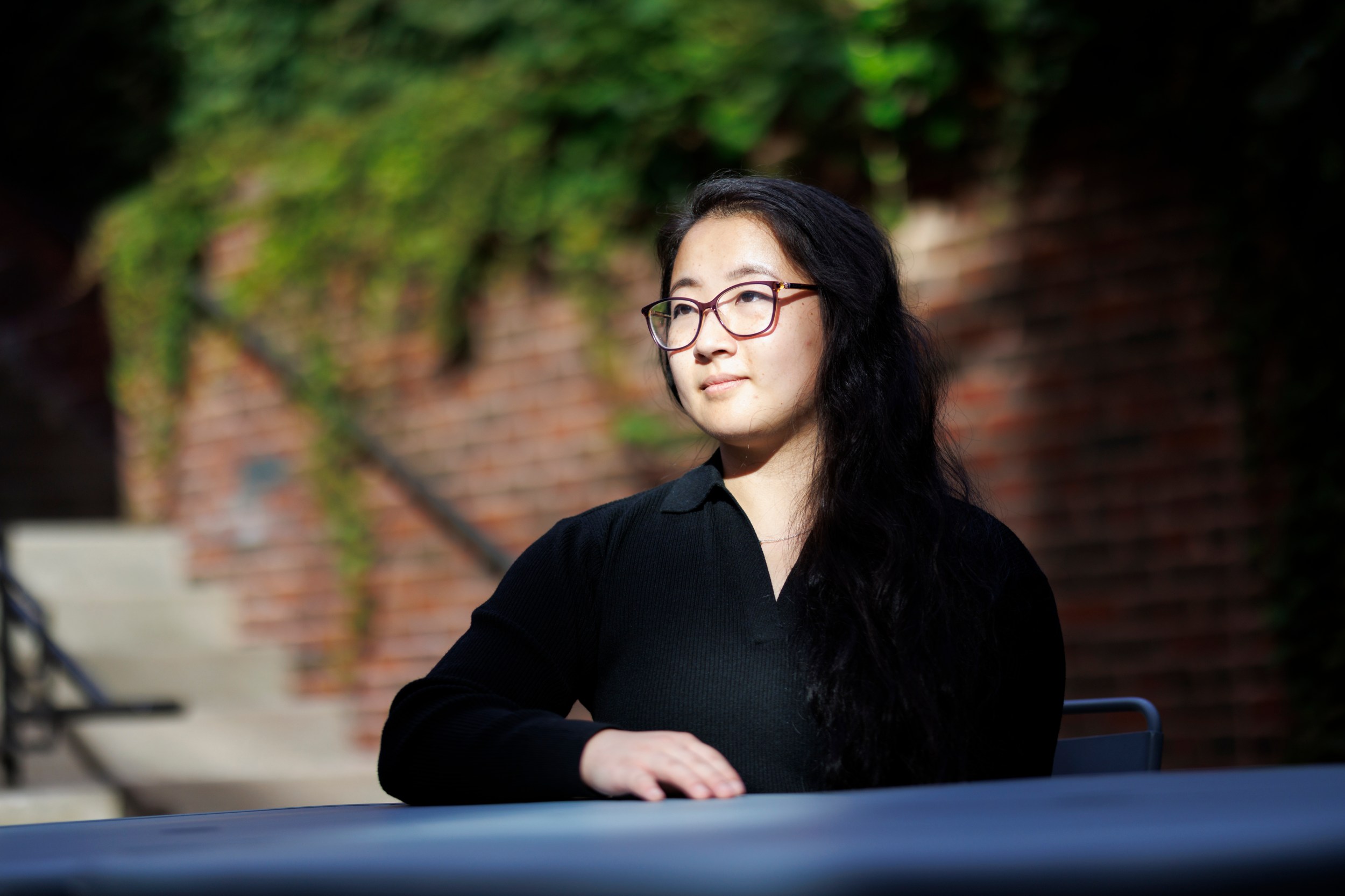

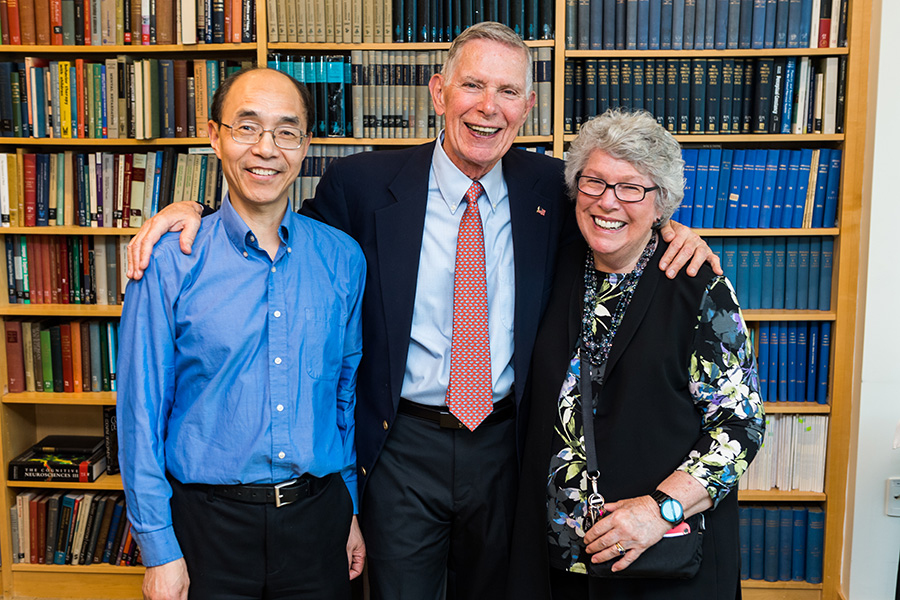

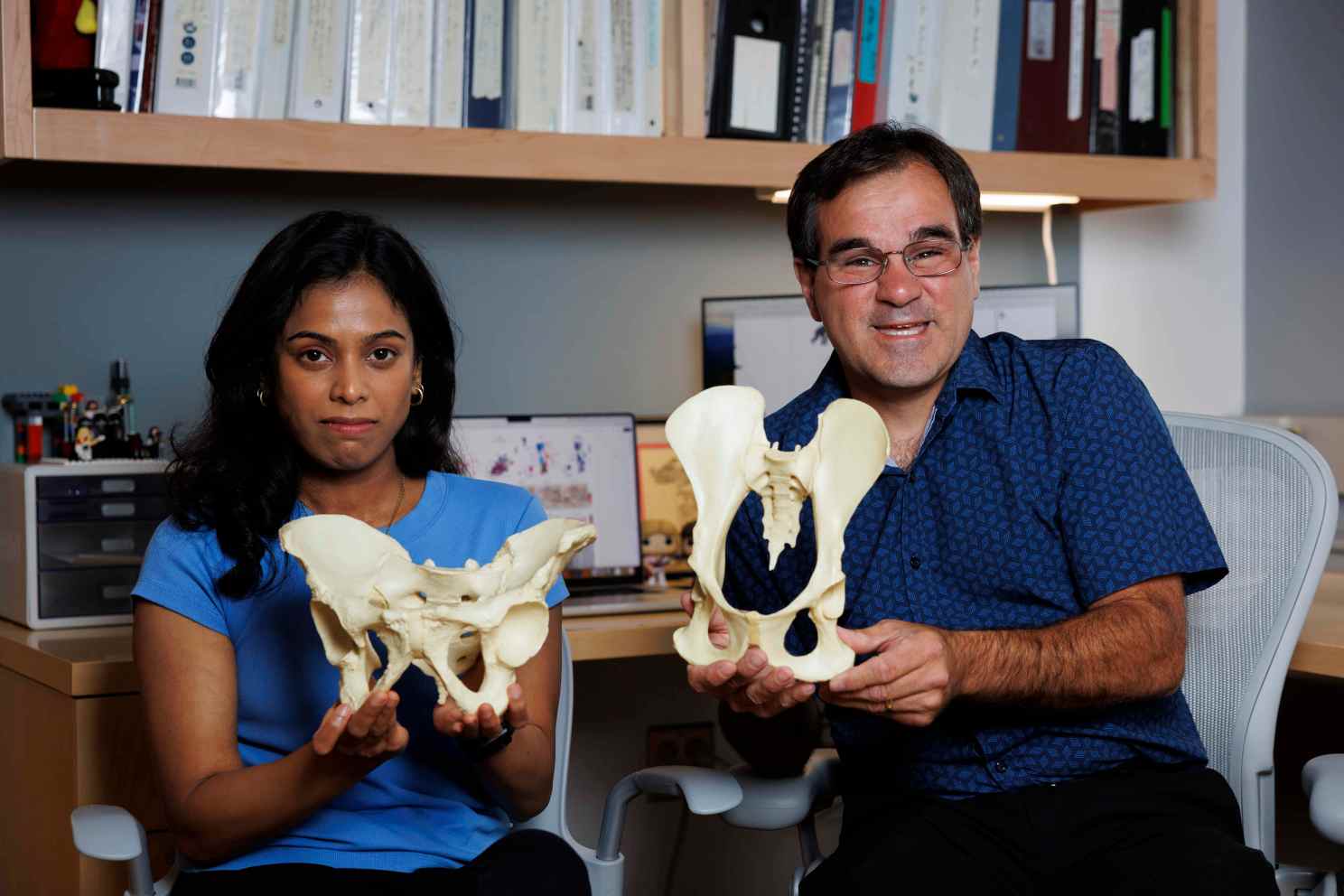

Photos by Niles Singer/Harvard Staff Photographer

Claims of pure bloodlines? Ancestral homelands? DNA science says no.

Geneticist explains analyses made possible by tech advances show human history to be one of mixing, movement, displacement

Alvin Powell

Harvard Staff Writer

Human history is rife with contentions about the purity (and superiority) of the bloodlines of one group over another and claims over ancestral homelands.

More than a decade of work on ancient human DNA has upended it all.

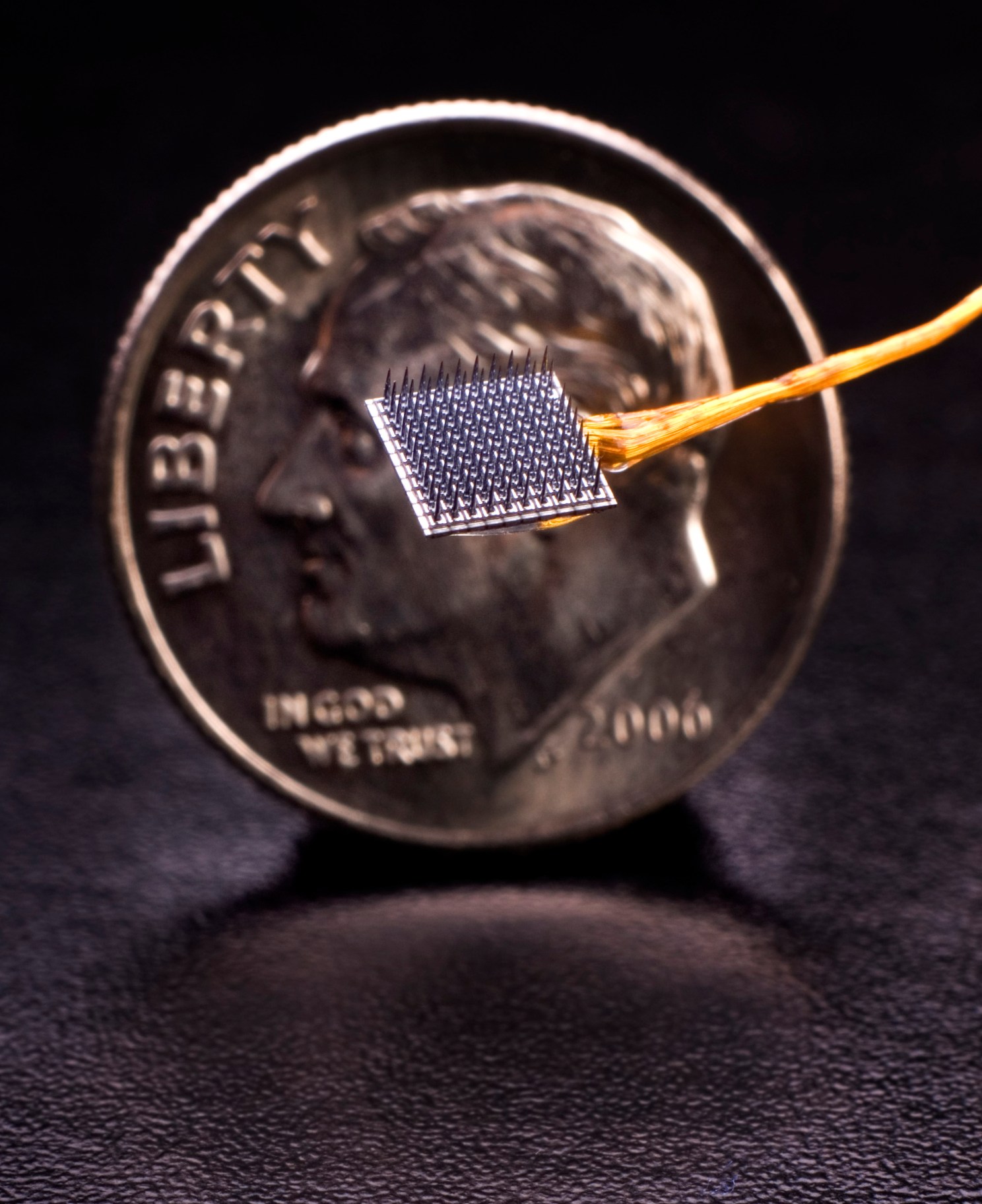

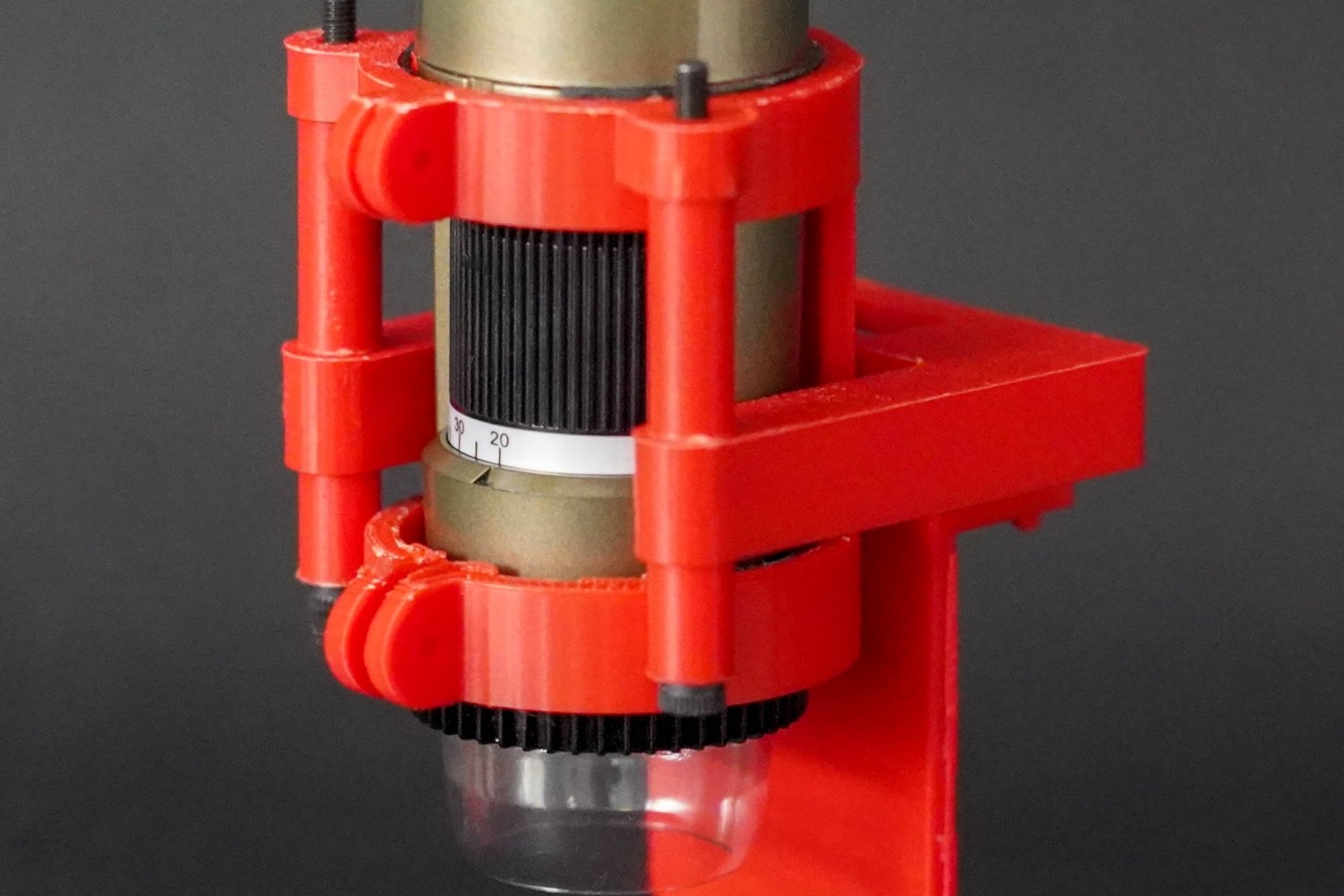

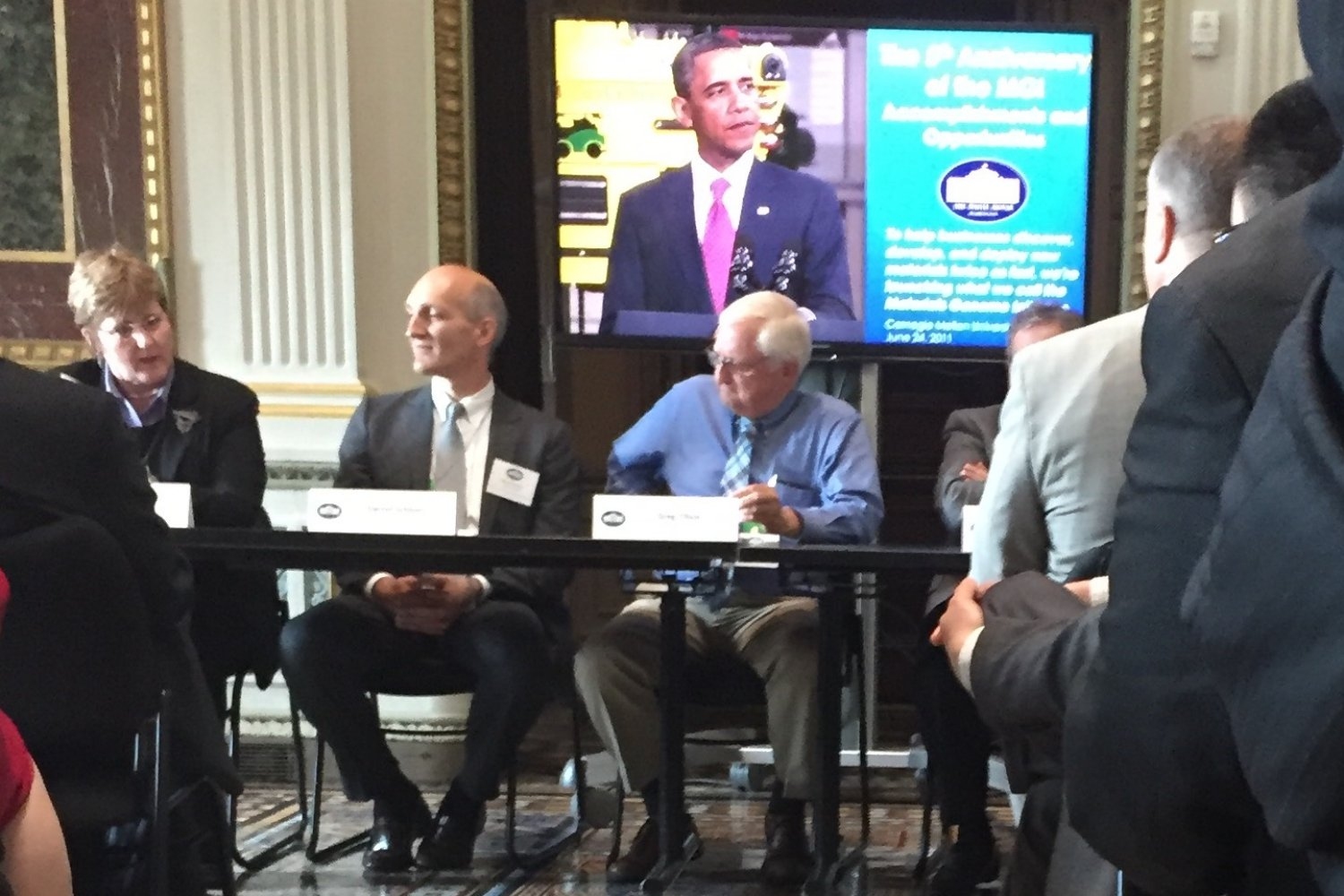

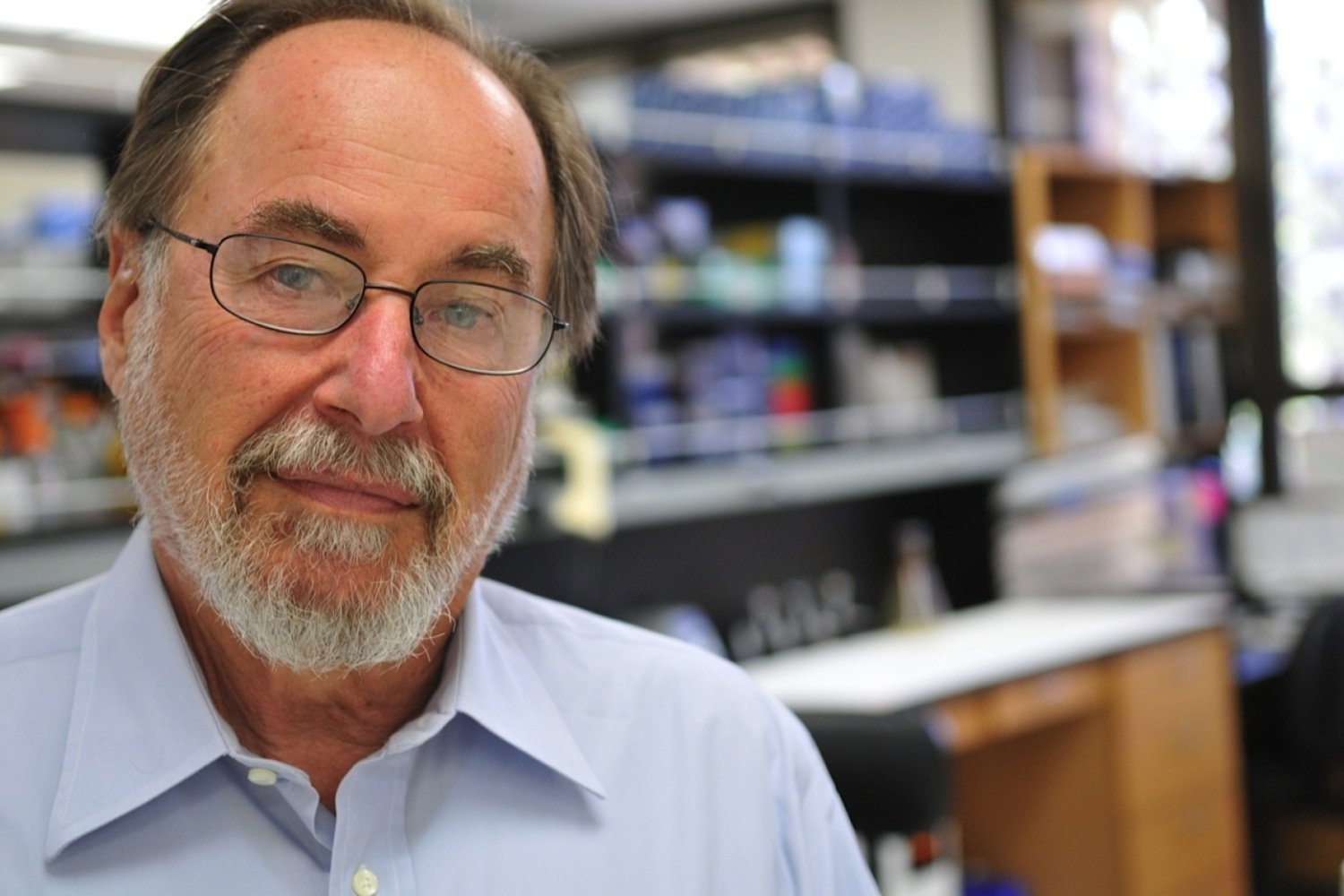

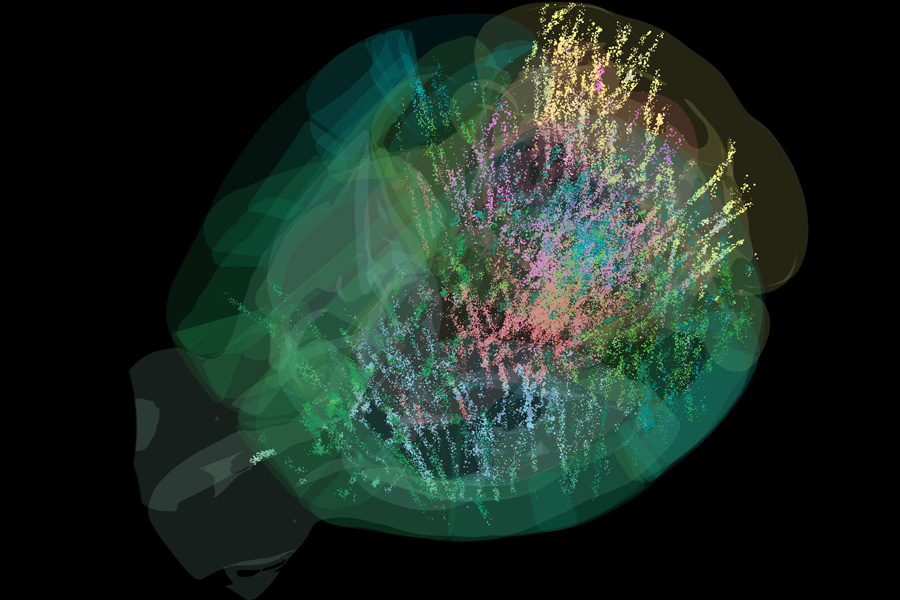

Instead, Harvard geneticist David Reich said on Monday, increasingly sophisticated analysis of genetic material made possible by technological advances shows that virtually everyone came from somewhere else, and everyone’s genetic background shows a mix from different waves of migration that washed over the globe.

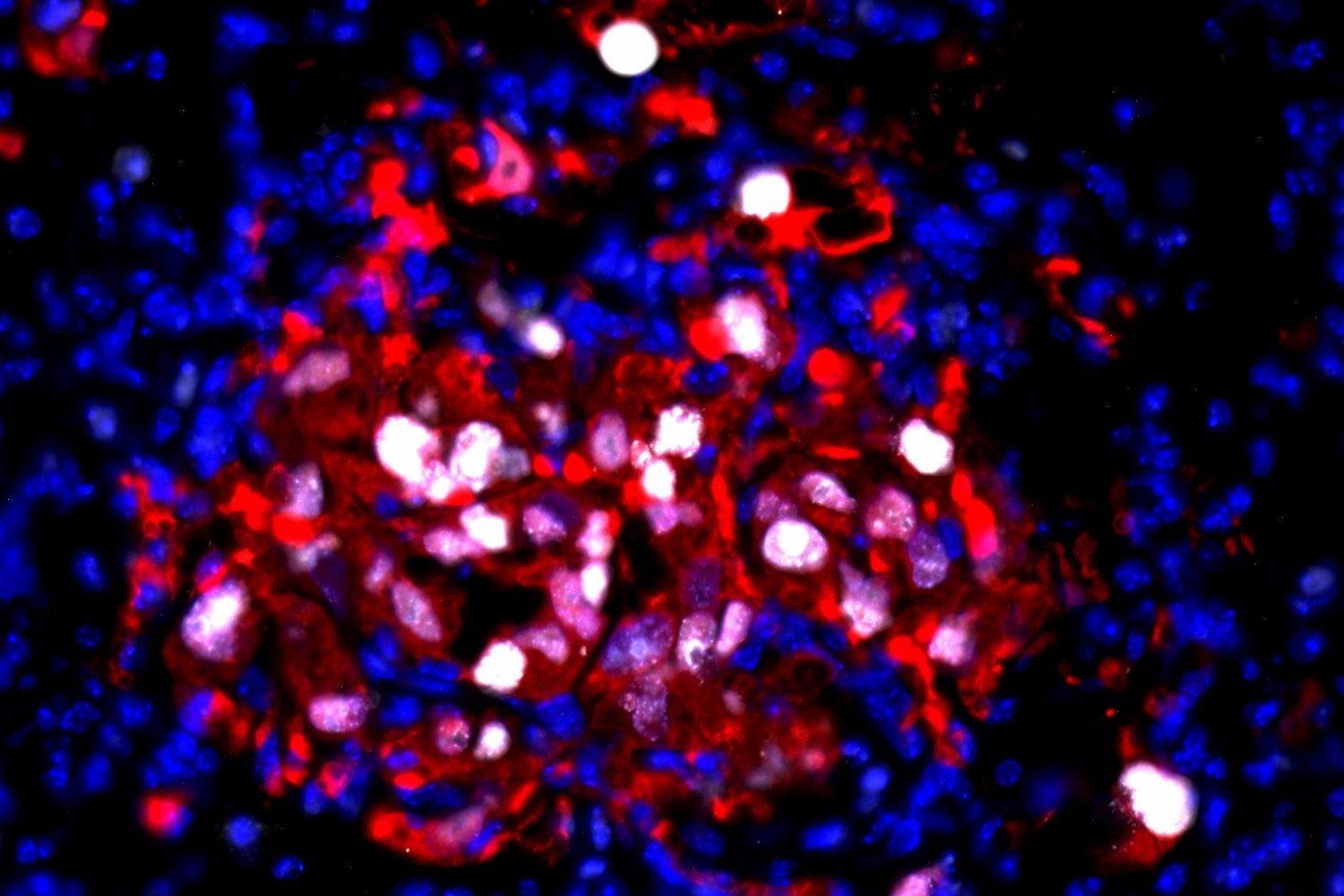

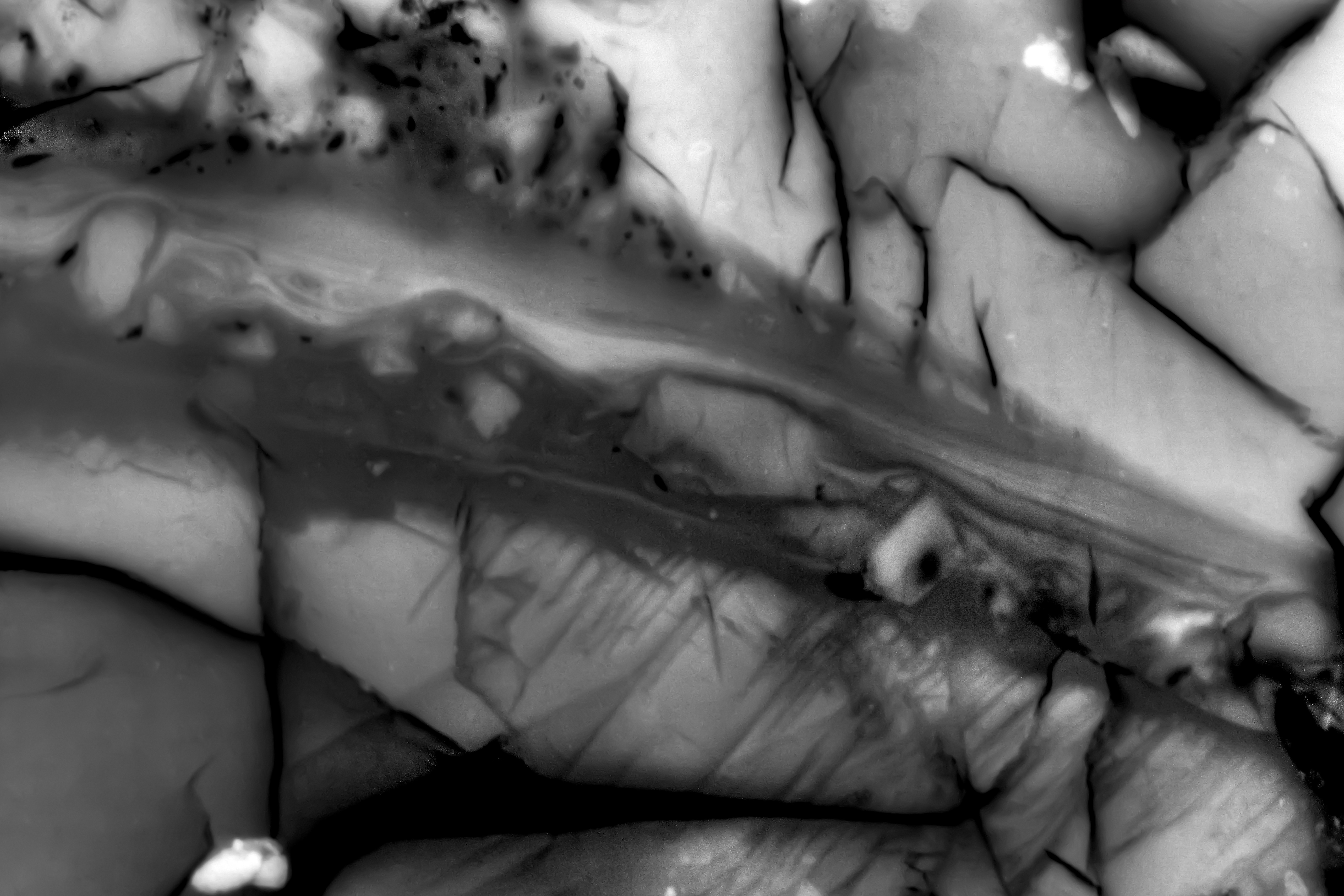

“Ancient DNA is able to peer into the past and to understand how people are related to each other and to people living today,” Reich said during a talk at the Radcliffe Institute for Advanced Study. “And what it shows is worlds we hadn’t imagined before. It’s very surprising.”

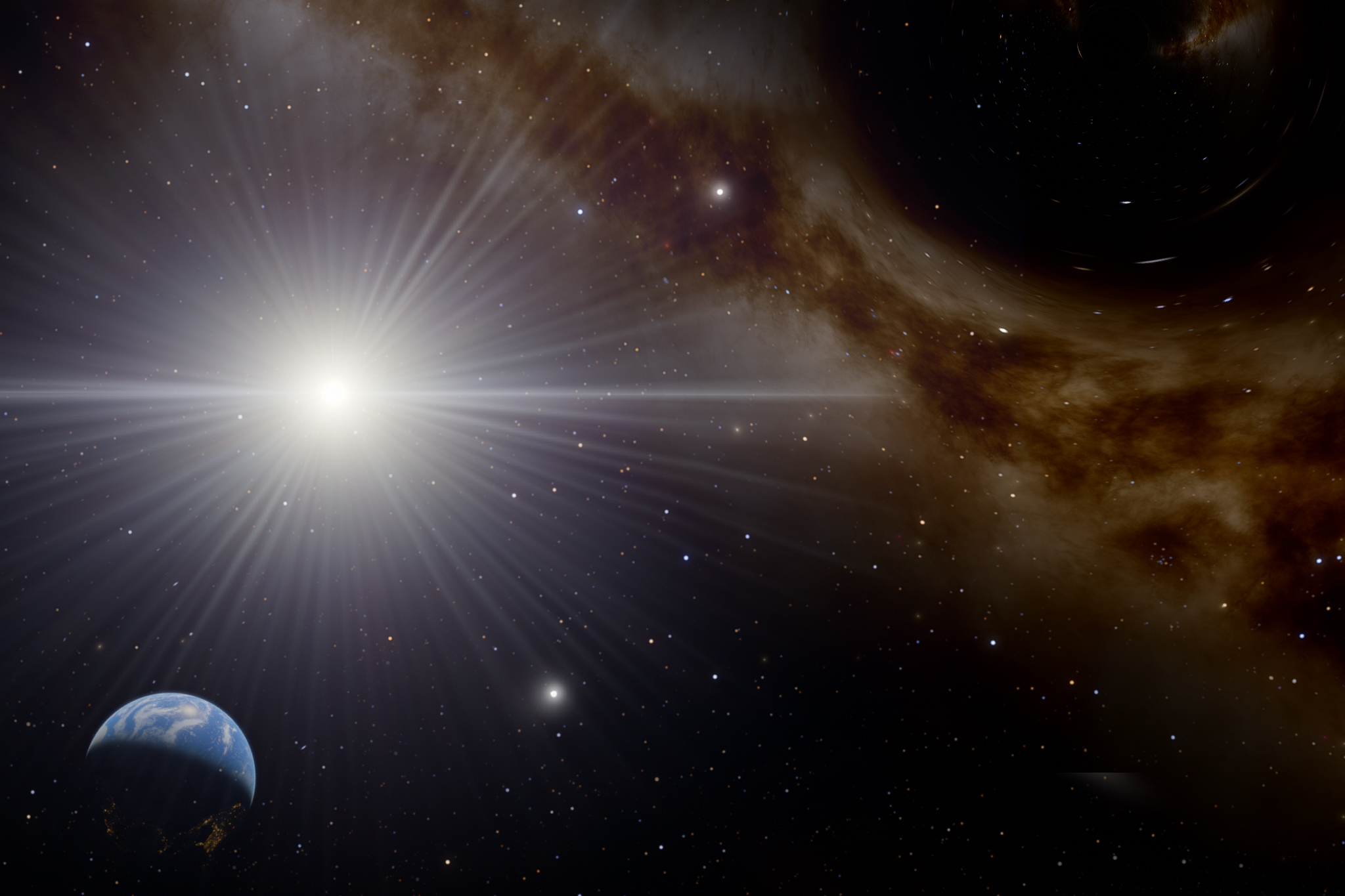

Human populations have been in flux for tens of thousands of years since our emergence from Africa. The details of the still-developing picture are complex, but the overall theme is one of increasing homogenization: human diversity has fallen since the time when modern humans lived alongside Neanderthals, two strains of Denisovans, and the diminutive Homo floresiensis of Indonesia.

Reich, a Harvard Medical School genetics professor, said that human diversity is lower today than it has been at any time in the past.

“Today, we’re very similar to each other. Even the most different people are at most maybe 200,000 years separated, with little gene flow,” Reich said. “But 70,000 years ago, there were at least five groups far more different from each other than any groups living today.”

Those themes are true even on the continent widely accepted as humanity’s birthplace.

In Africa, studies have shown that different tribal and language groups have moved over time, displacing others and mixing genetically. Cameroon, an area associated with Bantu languages, for example, was occupied by an entirely different people 3,000 to 8,000 years ago, Reich said.

Reich’s own career traces the arc of the emerging discipline of using ancient DNA analysis to better understand humanity’s past.

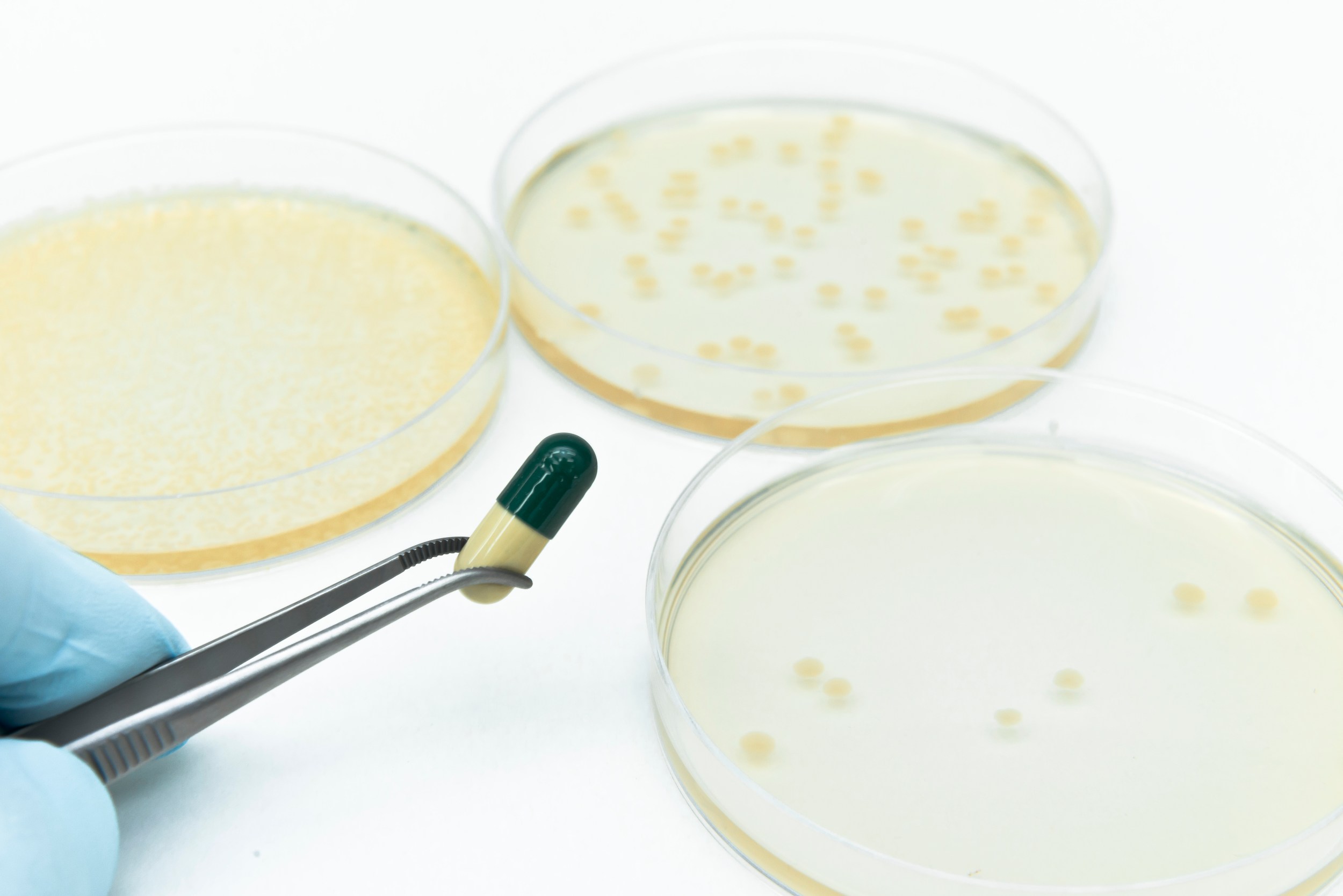

In 2007, Svante Pääbo reported he’d gotten DNA from Neanderthal bones. Reich at the time was an assistant professor studying prostate cancer at HMS and immediately viewed the new data as the most important in the world for understanding who we are and where we came from.

When Pääbo put together an international collaboration to analyze the data and better understand the Neanderthal-human relationship, Reich signed on, spending the next seven years analyzing the data in what he called his “second postdoc.”

“It’s just such amazing, exciting, like holy data. It’s very special,” Reich said. “And I think that was felt by all of the people in our international collaborations.”

Before this study, geneticists had convinced themselves that humans and Neanderthals had never mixed, but the analysis concluded they had.

Reich said he was “incredulous” as the results emerged. The research team assumed the result was in error and kept trying to find reasons that would negate it.

Eventually, though, they accepted the result, finding that Neanderthals and modern humans interbred and that most modern Europeans have about 2 percent Neanderthal ancestry.

Since then, it’s become apparent that modern humans mixed with another early human species, the Denisovans, whose remains were found in a cave in Siberia and are now believed to have lived broadly in Asia.

Their genetic fingerprints are strongest among those in east Asia and the islands of the Pacific.

“It’s clear modern and archaic humans mixed everywhere they met,” Reich said. “It’s not a rare thing for people to mix with people who are quite different from them. It’s in fact the rule.”

And humans moved around a good bit.

Neanderthal genes have been detected in east Asians, even though Neanderthals lived in Europe and west Asia. Before the advent of ancient DNA, the prevailing view was that technology and language spread more through cultural exchange than through actual migration.

Now it looks as if groups of hunter-gatherers lived in Europe before farmers arrived, bringing agriculture as well as their genetic imprint.

Later, 5,000 to 6,000 years ago, mobile pastoralists called the Yamnaya from the Asian steppe arrived, leaving a large genetic impact — 75 percent in Germany and 90 percent in Britain — and possibly bringing with them the Indo-European language that diversified into European languages.

In the Iberian peninsula — modern Spain and Portugal — the Yamnaya genetic impact is smaller than in Germany and Britain, about 40 percent, but the Y chromosome of the first farmers who preceded them is entirely absent in the population, a sign of something that “can’t have been a happy occasion for the men involved,” Reich said.

“The local male population completely failed to transmit its Y-chromosomes to the subsequent population,” Reich said. “How that happened, we don’t know, but several thousand years later, the descendants of these Iberians came to the Americas and exactly this happened. People in Colombia have almost no local Y-chromosomes. They’re almost all European. And they have almost no European mitochondrial DNAs (inherited through the mother). They’re almost all Native American. And that’s the result of exploitation and social inequality. And perhaps that’s what occurred here.”

But ancient DNA doesn’t rule out cultural change occurring without mixing. Carthaginians were long associated with seafaring Phoenicians, but ancient DNA shows them more closely related to the Greeks with whom they competed economically.

“The big perspective change from ancient DNA study is that people living today are almost never the descendants of the people in the same place thousands of years before,” Reich said. “Human movements have occurred at multiple timescales, often disruptive to the populations that experience them, and these patterns were not possible to predict and anticipate without direct data.”

Another major revelation provided by ancient DNA is the impact of evolutionary natural selection on human populations.

Reich said it had been believed that natural selection was minimal among humans for the last 10,000 years, and his own early study, in 2015, showed just a dozen places in the genome that had changed over the last 8,000 years in a way that might indicate natural selection.

Last year, a similar study showed 21. So Reich and his team set out to develop ways to reduce false signals and increase statistical power. In an examination of 10,000 people’s genomes, they found almost 500 significant changes.

Reich now believes Europe, at least, is in a period of accelerated selection that began 5,000 years ago, focusing on immune and metabolic traits. Some traits show up in the DNA record as rising over time and then plummeting, as with genes that make one prone to celiac disease and a severe form of tuberculosis.

Reich said it is unknown why they rose in the population in the first place, but likely they conferred some unknown advantage before disadvantages related to disease outweighed them.

“People’s stories about their history are almost always wrong,” Reich said. “I don’t think that’s a bad thing. I think that’s a good thing and a humbling thing to be made aware of.”

More like this

-

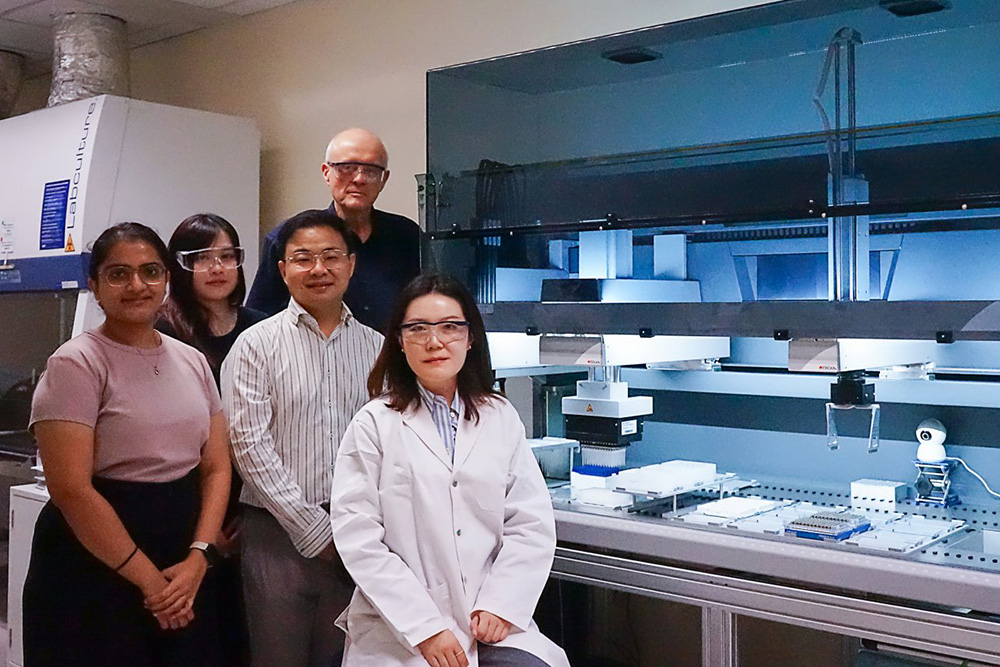

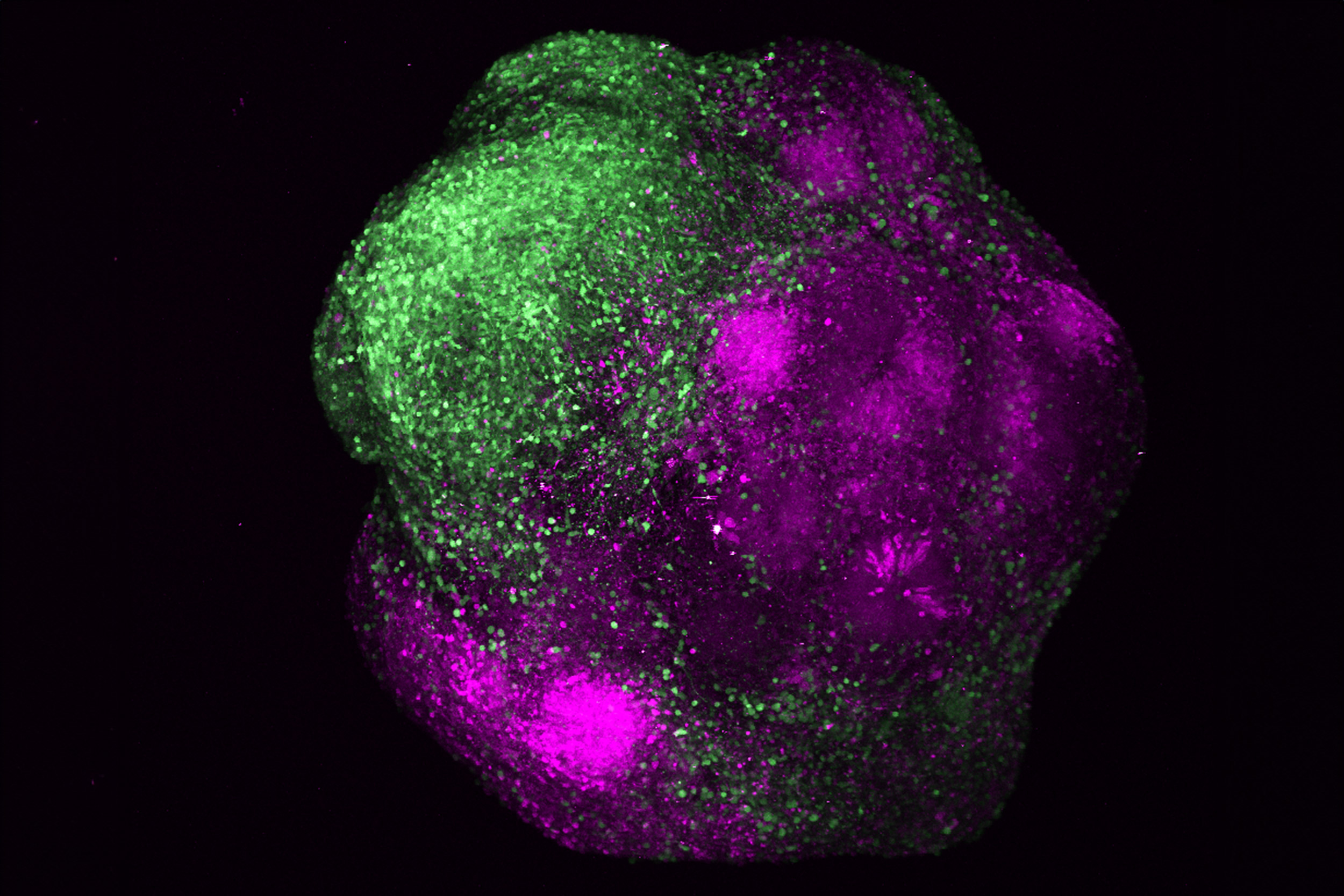

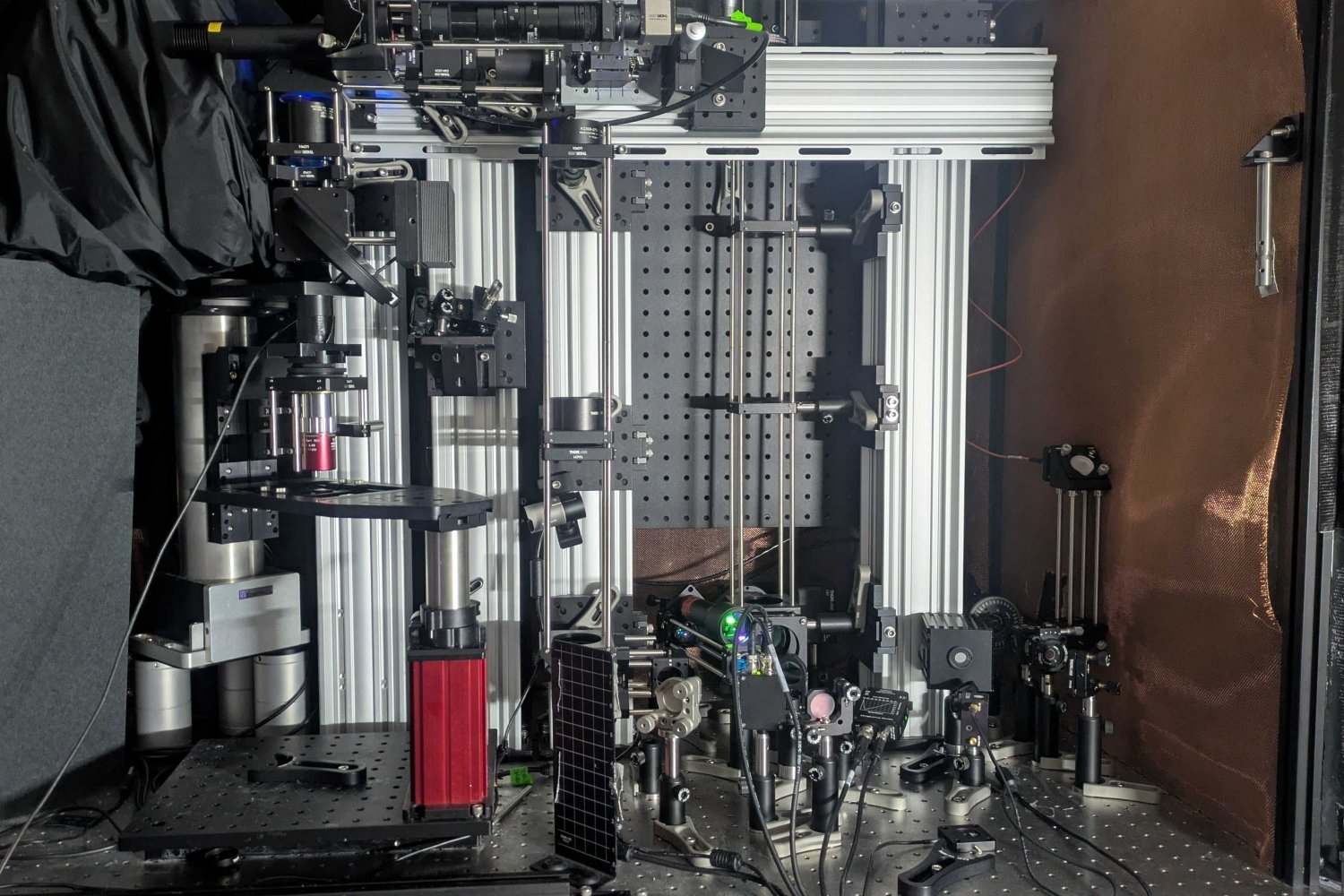

His lab’s ancient DNA studies are rewriting human history

Yet federal funding cuts have put next chapter of David Reich’s work in doubt